Load Balancing 101

In today’s world, businesses want to deliver the best user experience to their customers. Most applications with a large user base need to serve millions of requests efficiently. A single server is not enough to ensure high availability and reliability, so most organizations run multiple servers to handle the load on their applications. Load balancing is an important component in handling customer requests reliably, even when one or more servers are down or degraded.

What is Load Balancing?

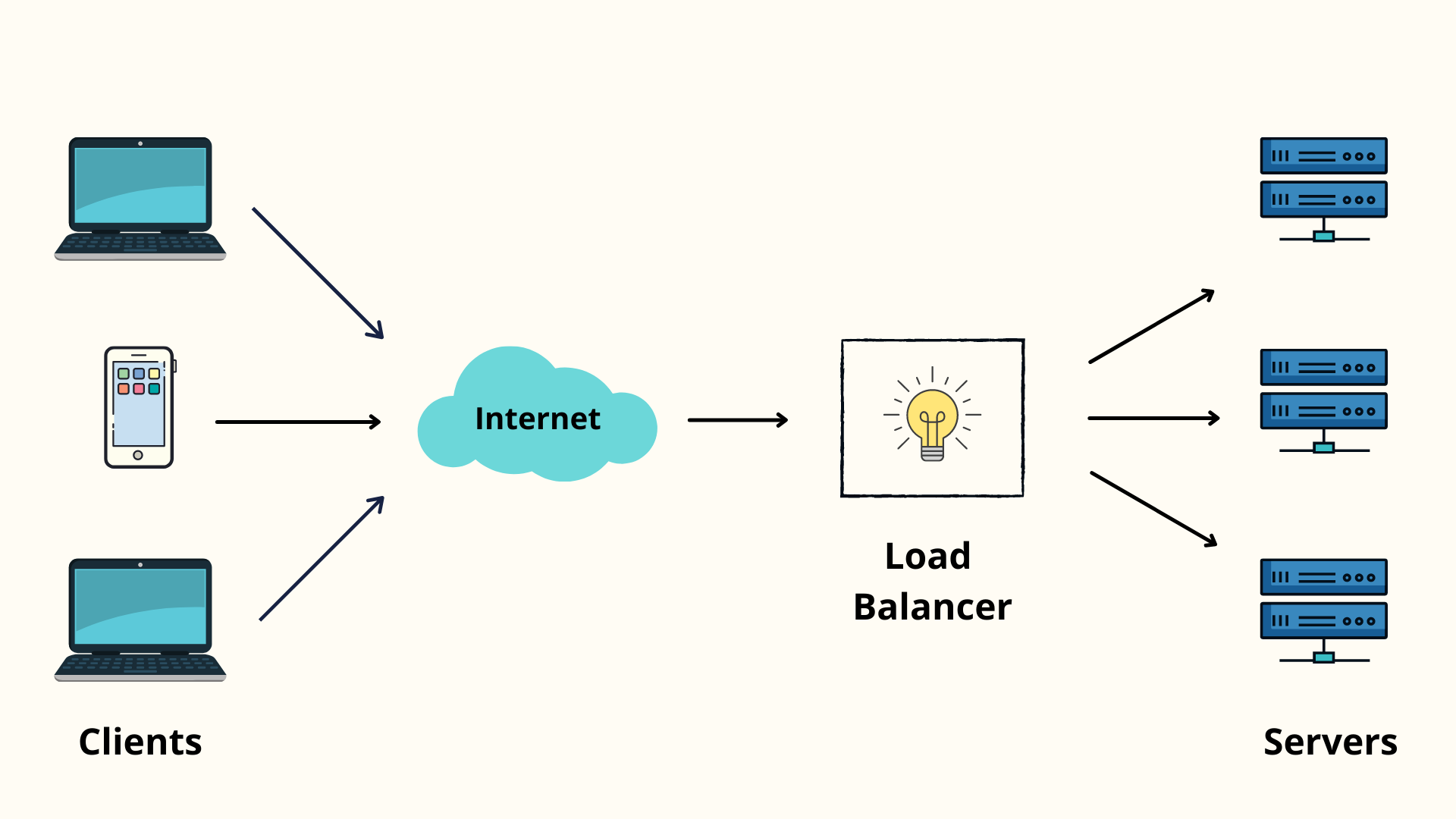

Load balancing refers to the process of efficiently distributing incoming network traffic across multiple servers. The main task of a load balancer is to ensure that no single server is flooded with too many requests. This improves responsiveness and increases the availability of applications for users.

You can think of a load balancer as a middleman that manages the flow of information between the servers and an endpoint device (mobile apps, websites, etc.). Load balancers run continuous health checks on servers to ensure they can handle requests, and they can remove unhealthy servers from the pool until they are restored.

Let’s deep dive into the working of a Load Balancer.

How load balancing works

As mentioned above, the load balancer (LB) sits between the client and the servers. Let’s see how a load balancer processes a request through a basic flow.

- The client makes a request to the server.

- The request arrives at the load balancer, which accepts it.

- The LB decides which host should receive the request based on a set of parameters.

- The LB performs bi-directional NAT (Network Address Translation): it updates the destination IP (and possibly port) of the request to match the service on the selected host.

- The host accepts the connection and responds back to the LB.

- The LB intercepts the response packet from the host (server) and changes its source IP (and possibly port) back to the load balancer's own address, so the reply appears to come from the address the client contacted.

- The client finally receives the return packet from the LB.
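The address rewriting in the flow above can be sketched in a few lines of Python. This is only a toy model, not how a real load balancer is implemented: the `Packet` class, the `VIP` address and the host list are made-up names for illustration, and it assumes the response's source is rewritten to the load balancer's own virtual IP so that the client sees a reply from the address it contacted.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str           # "ip:port" of the sender
    dst: str           # "ip:port" of the receiver
    payload: str = ""


# Hypothetical addresses, chosen only for this example.
VIP = "203.0.113.10:80"                      # address clients connect to
HOSTS = ["10.0.0.1:8080", "10.0.0.2:8080"]   # backend pool


def forward_to_host(request: Packet, host: str) -> Packet:
    """Inbound: rewrite the destination from the VIP to the chosen host."""
    return replace(request, dst=host)


def return_to_client(response: Packet) -> Packet:
    """Outbound: rewrite the source from the host back to the VIP."""
    return replace(response, src=VIP)


request = Packet(src="198.51.100.7:51000", dst=VIP, payload="GET /")
to_host = forward_to_host(request, HOSTS[0])

# The host replies to the source it saw (the client); the reply travels
# back through the load balancer, which rewrites the source address.
reply = Packet(src=to_host.dst, dst=to_host.src, payload="200 OK")
to_client = return_to_client(reply)

print(to_host)
print(to_client)
```

Notice that the client address is never touched in this sketch: only the destination changes on the way in and only the source changes on the way out.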

Smart selection

Awesome. We just learned how a request is processed. This looks simple, right? True, but you might wonder how the load balancer determines which host can best handle a request. There are several load balancing algorithms, such as Round Robin and Least Response Time, that help choose the right host (server) based on a number of parameters. The load balancer maintains a list of hosts (i.e. a pool) to choose from, and the algorithm returns the best host for each request (multi-part requests are an exception).

Some common parameters for selecting a host include:

- CPU utilization

- Number of active connections

- Response time

- Application specific data such as HTTP headers, cookies, or even data within the application message.
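To make this concrete, here is a minimal sketch of how a few of these parameters could be combined into a single score. The `HostStats` class, the `score` function, the equal weighting and the normalization limits are all assumptions made for this example; real load balancers use their own (often configurable) formulas.

```python
from dataclasses import dataclass


@dataclass
class HostStats:
    name: str
    cpu: float          # CPU utilization, 0.0 to 1.0
    connections: int    # number of active connections
    response_ms: float  # recent average response time in milliseconds


def score(host: HostStats, max_conn: int = 100, max_ms: float = 500.0) -> float:
    """Lower is better. Each metric is scaled to 0..1 and weighted equally
    (an arbitrary choice for illustration)."""
    conn_load = min(host.connections / max_conn, 1.0)
    latency = min(host.response_ms / max_ms, 1.0)
    return (host.cpu + conn_load + latency) / 3


pool = [
    HostStats("app-1", cpu=0.80, connections=40, response_ms=120.0),
    HostStats("app-2", cpu=0.30, connections=55, response_ms=90.0),
    HostStats("app-3", cpu=0.50, connections=10, response_ms=300.0),
]

best = min(pool, key=score)
print(best.name)  # app-2
```

Here `app-2` wins even though it has the most connections, because its low CPU usage and fast responses outweigh that in the combined score.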

Health Monitoring

Suppose we selected a host using our smart algorithm. But what if the host is unresponsive or the server has crashed? Our request would fail in that case! Most load balancers handle this with health monitoring, which determines whether a host is in a working state. A very basic monitoring technique is to ping the host. If the host does not respond to the ping, we can assume that any services defined on the host are probably down. If a host is down, we need to update the list of available hosts so that this host is not selected.

There are smarter health monitors that make service pings, since pinging the host alone is not sufficient to determine whether the service itself is working.
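A simple form of service ping is to try opening a TCP connection to the service port. The sketch below does that with Python's standard `socket` module and filters the pool down to the hosts that answered. The function names and the pool format (a list of `(host, port)` pairs) are assumptions for this example; real health monitors usually go further, for instance by requesting a specific URL and checking the response.

```python
import socket


def is_healthy(host: str, port: int, timeout: float = 1.0) -> bool:
    """Service-level check: can we open a TCP connection to the port?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, unreachable, or name resolution failed.
        return False


def healthy_hosts(pool, check=is_healthy):
    """Return only the (host, port) pairs that pass the health check."""
    return [(host, port) for (host, port) in pool if check(host, port)]
```

A load balancer would run something like `healthy_hosts(pool)` on a timer and hand only the surviving hosts to the selection algorithm. The `check` parameter makes it easy to swap in a smarter monitor later.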

Load Balancing Algorithms

Multiple algorithms are used for load balancing, based on the number of connections, server response time, IP addresses, etc.

Least Connection Method

As the name suggests, the load balancer directs traffic to the server with the fewest active connections. The idea is to distribute requests evenly across all servers.
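A minimal sketch of this method, assuming the load balancer keeps a counter of active connections per server (the class and method names are made up for this example):

```python
class LeastConnections:
    def __init__(self, servers):
        # Active connection count per server, all starting at zero.
        self.active = {server: 0 for server in servers}

    def acquire(self):
        """Pick the server with the fewest active connections."""
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        """Call when a connection to the server is closed."""
        self.active[server] -= 1


lb = LeastConnections(["a", "b", "c"])
first = [lb.acquire() for _ in range(3)]  # each server gets one connection
lb.release("b")                           # "b" finishes its request
print(first, lb.acquire())                # "b" is now the least loaded
```

On a tie, `min` returns the first server in insertion order, so a fresh pool fills up in the order the servers were listed.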

Least Response Time Method

In this method, traffic is directed to the server with the fewest active connections and the lowest average response time. This method aims for quick response times for end clients.
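There is more than one way to combine the two signals. The sketch below takes one possible interpretation: compare servers by active connections first and use the average response time as a tie-breaker. The `Server` class and the `pick` function are assumptions for this example, not a standard API.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active: int = 0      # active connections
    avg_ms: float = 0.0  # running average response time
    samples: int = 0

    def record(self, elapsed_ms: float) -> None:
        """Fold one measured response time into the running average."""
        self.samples += 1
        self.avg_ms += (elapsed_ms - self.avg_ms) / self.samples


def pick(servers):
    """Fewest active connections first, then lowest average response time."""
    return min(servers, key=lambda s: (s.active, s.avg_ms))


a, b = Server("a"), Server("b")
a.record(100)
a.record(200)    # a averages 150 ms
b.record(50)     # b averages 50 ms
print(pick([a, b]).name)   # b: same connection count, faster responses
b.active = 1
print(pick([a, b]).name)   # a: fewer active connections
```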

Round Robin Method

One of the most commonly used methods. The load balancer maintains a queue of servers. It rotates through them by directing traffic to the first available server and then moving that server to the bottom of the queue. Two common variants are Weighted Round Robin and Dynamic Round Robin.
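The queue rotation described here maps nicely onto Python's `collections.deque`. The sketch below ignores availability checks for brevity. The `weighted` helper is a deliberately naive take on Weighted Round Robin that simply repeats each server according to its weight; production implementations usually interleave servers more smoothly.

```python
from collections import deque


class RoundRobin:
    def __init__(self, servers):
        self.queue = deque(servers)

    def next(self):
        server = self.queue[0]
        self.queue.rotate(-1)  # move the head of the queue to the back
        return server


def weighted(servers_with_weights):
    """Naive weighted variant: a server with weight 2 appears twice."""
    expanded = [s for s, weight in servers_with_weights for _ in range(weight)]
    return RoundRobin(expanded)


rr = RoundRobin(["a", "b", "c"])
print([rr.next() for _ in range(5)])    # ['a', 'b', 'c', 'a', 'b']

wrr = weighted([("a", 2), ("b", 1)])
print([wrr.next() for _ in range(6)])   # ['a', 'a', 'b', 'a', 'a', 'b']
```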

Source and Destination IP Hash Method

The server is selected based on a hash key. The hash key is generated from the source and destination IP addresses of the request, and it determines which server will receive the request.
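A minimal sketch of the idea, assuming SHA-256 as the hash function and a simple modulo over the pool size (both are choices made for this example, not something a particular load balancer mandates):

```python
import hashlib


def pick_server(src_ip: str, dst_ip: str, servers: list[str]) -> str:
    """Map a (source IP, destination IP) pair to one server in the pool."""
    key = f"{src_ip}|{dst_ip}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
chosen = pick_server("198.51.100.7", "203.0.113.10", servers)
# The same address pair always maps to the same server.
assert chosen == pick_server("198.51.100.7", "203.0.113.10", servers)
print(chosen)
```

Because the result depends only on the two addresses, a given client keeps landing on the same server as long as the pool does not change. Python's built-in `hash()` is avoided here since it is randomized per process for strings. Note that with plain modulo, adding or removing a server remaps most clients.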

Types of Load Balancing

There are multiple types of load balancers, which differ in the data they use, where they operate, and so on.

Layer 4 vs Layer 7 Load Balancers

- Layer 4 - directs traffic based on data from network and transport layer protocols. Common layer 4 load balancers use IP addresses and ports to direct traffic.

- Layer 7 - adds content switching to load balancing. This allows routing decisions based on attributes like HTTP headers, uniform resource identifiers (URIs), SSL session IDs and HTML form data.
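The difference is easiest to see in what each layer is allowed to look at. In the sketch below, the layer 4 router only sees a port number, while the layer 7 router can inspect the request path and headers. The pool names and routing rules are made up for this example.

```python
# Hypothetical mapping from destination port to backend pool.
L4_POOLS = {80: "web-pool", 443: "web-pool", 5432: "db-pool"}


def route_l4(dst_port: int) -> str:
    """Layer 4: decide using transport-level data only (here, the port)."""
    return L4_POOLS.get(dst_port, "default-pool")


def route_l7(path: str, headers: dict) -> str:
    """Layer 7: decide using the content of the HTTP request."""
    if path.startswith("/api/"):
        return "api-pool"
    if headers.get("Accept", "").startswith("image/"):
        return "static-pool"
    return "web-pool"


print(route_l4(443))                                     # web-pool
print(route_l7("/api/users", {}))                        # api-pool
print(route_l7("/logo.png", {"Accept": "image/png"}))    # static-pool
```

Both requests to port 443 look identical at layer 4, but layer 7 can send them to different pools because it reads the request itself.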

Global vs DNS Load Balancers

- GSLB - Global Server Load Balancing extends L4 and L7 capabilities to servers in different geographic locations.

- DNS - a domain is configured in DNS so that client requests to it are directed to the right group of servers. E.g. if you need admin data, a DNS load balancer would direct all admin requests to a specific domain defined in DNS.

Hardware vs Software Load Balancers

- Software - software installed on a server or in the cloud that acts as a load balancer. There is no dedicated physical device, so it is cheaper.

- Hardware - also known as an Application Delivery Controller (ADC), this is a physical device that is costlier but comes with a vast set of advanced features. E.g. the F5 hardware load balancer.

Why do we need Load Balancing?

- Enhances user experience by providing low response times and high availability.

- Prevents server overloads and crashes.

- Security - the off-loading function of a load balancer helps defend an organization against distributed denial-of-service (DDoS) attacks.

- Helps build flexible networks that can meet new challenges without compromising security, service or performance.

- Provides analytics from load balancer data that help you identify traffic bottlenecks in your infrastructure.

- Maintains high availability even if one or more servers go down. Some load balancers can even spawn new boxes and start serving load on them.

- Gives the flexibility to add or remove servers based on demand. This means organizations can save on server costs during periods of low traffic.

I hope you learned something new. Feel free to suggest improvements ✔️